Connecting Azure Data Factory to FTP servers with Private IP and using Logic App to run ADF Pipelines

How to use Azure Data Factory to connect to an FTP server from a static IP and orchestrate the ADF pipeline with a Logic App

Business Case:

Almost all FTP servers in enterprises are protected and sit behind firewalls. Firewalls are great for safeguarding infrastructure from unauthorized access and malicious attacks, but they also create a constraint for users and services that need to access the FTP (SFTP) server. Most of the time, FTP servers only allow access from specific static IPs and ports.

This raises the question of how to give various Azure services access to the FTP server. Most Azure PaaS services connect out from the IP ranges of their Azure region. Customers are not willing to open such a wide range of IPs to Azure services, and that’s where the challenge becomes interesting.

Scenario:

The scenario I’m going to describe in this article is a very common one: accessing an FTP server from the Azure Data Factory (ADF) service.

My customer needs to make daily file drops to a 3rd party organization that uses an FTP server, and that organization only allows access to its FTP server from specific IPs. My customer currently uses on-premises servers and is moving its operations to Azure, so we need to migrate the FTP process to Azure as well.

Solution:

We chose ADF pipelines to build the export files, and we use an FTP copy step in ADF to transfer them. To keep this secure, we need a single static IP that the 3rd party can open in its firewall.

For this scenario, ADF offers two integration runtime (IR) options: the auto-resolving Azure IR and the self-hosted IR.

1-ADF with Auto-resolving Integration Runtime

Reference: Azure Data Factory IP range for AZ Regions
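To put the auto-resolving option in perspective, here is a minimal Python sketch that counts how many addresses a firewall rule would have to allow for a regional service tag, compared with a single static IP. It assumes the layout of Azure's downloadable Service Tags JSON file (a top-level `values` list whose entries have a `name` and `properties.addressPrefixes`). The tag name and prefixes below are hypothetical placeholders taken from the reserved documentation ranges, not real Data Factory ranges.

```python
import ipaddress
import json

# Hypothetical excerpt shaped like Azure's Service Tags JSON file.
# The prefixes are RFC 5737 documentation ranges, NOT real ADF ranges.
SAMPLE_SERVICE_TAGS = """
{
  "values": [
    {
      "name": "DataFactory.ExampleRegion",
      "properties": {
        "addressPrefixes": ["192.0.2.0/24", "198.51.100.0/25", "203.0.113.8/29"]
      }
    }
  ]
}
"""


def allowlist_size(service_tags_json: str, tag_name: str) -> int:
    """Return how many addresses the given service tag's prefixes cover."""
    data = json.loads(service_tags_json)
    for entry in data["values"]:
        if entry["name"] == tag_name:
            prefixes = entry["properties"]["addressPrefixes"]
            return sum(ipaddress.ip_network(p).num_addresses for p in prefixes)
    raise KeyError(f"service tag {tag_name!r} not found")


def single_static_ip_size(ip: str) -> int:
    """A single reserved static IP is a host-only rule: exactly one address."""
    return ipaddress.ip_network(ip).num_addresses


if __name__ == "__main__":
    print(allowlist_size(SAMPLE_SERVICE_TAGS, "DataFactory.ExampleRegion"))  # 392
    print(single_static_ip_size("203.0.113.10"))  # 1
```

With the placeholder data this prints 392 and then 1 (a /24, a /25 and a /29 add up to 256 + 128 + 8 addresses).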

2-ADF Self Hosted Integration Runtime server with Reserved Static IP

Reference: How to create self hosted Azure ADF runtime

We built our own VM in Azure with a reserved static IP, installed the self-hosted integration runtime agent on it, and registered it with our ADF integration runtime.
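Before wiring the VM into ADF, it can help to confirm from the VM itself that the 3rd party's firewall really lets its static IP through. Below is a small Python sketch of a plain TCP reachability check; it assumes the VM's outbound traffic leaves through its reserved public IP.

```python
import socket


def port_reachable(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts (socket.timeout is an OSError
        # subclass) and DNS resolution failures.
        return False
```

For example, `port_reachable("sftp.example.com", 22)` (a hypothetical host) run on the VM should return `True` once the 3rd party has allowed the IP; use port 21 for plain FTP. This only proves the TCP handshake works, it does not test credentials.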

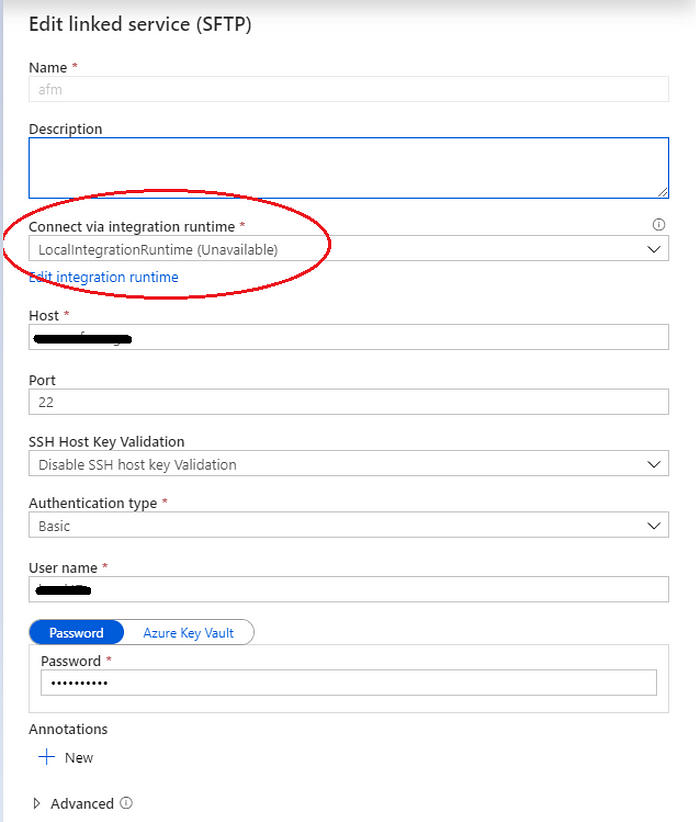

After building out the VM and registering it with the ADF IR, we have a new self-hosted runtime server (in our example the server shows as unavailable because the VM is stopped).

3-Optimizing the runtime and cost for ADF with self hosted server

Now that we know how to access the FTP server via a static IP, we need to optimize the cost and process of executing a pipeline in ADF. We could keep the ADF Integration Runtime server online 24/7, but most of the time we only need it for a specific period. Instead, we can control when the VM runs and execute the ADF pipelines on our desired schedule. The workflow looks like this:

- Start ADF IR server

- Run ADF Pipeline

- Stop ADF IR server

Starting the IR server, letting it warm up, and waiting for the IR connection to be established takes time, and how long it takes depends on the VM type/size and the environment.
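Because that warm-up time varies, any fixed delay is a guess. A minimal Python sketch of the alternative is a polling helper with a timeout. The `condition` callable (for example, "is the IR node online?") is a hypothetical placeholder you would implement against whatever status check you use.

```python
import time
from typing import Callable


def wait_until(
    condition: Callable[[], bool],
    timeout_s: float,
    interval_s: float = 30.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll `condition` until it returns True or `timeout_s` elapses.

    Returns True if the condition was met, False on timeout.
    """
    deadline = clock() + timeout_s
    while True:
        if condition():
            return True
        remaining = deadline - clock()
        if remaining <= 0:
            return False
        # Never sleep past the deadline.
        sleep(min(interval_s, remaining))
```

The injectable `clock` and `sleep` parameters are only there so the helper can be tested without real waiting.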

4-Starting and Stopping VM via webhooks

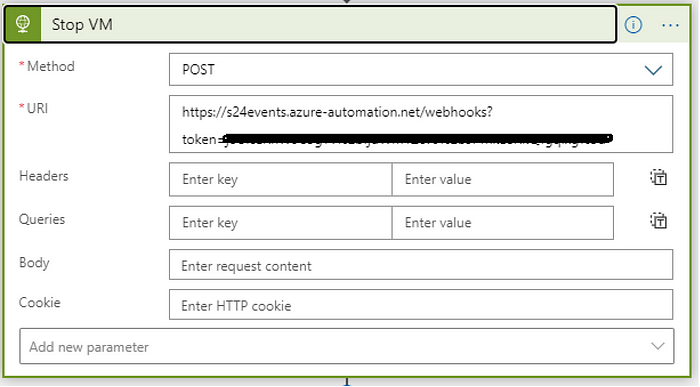

To start or stop the VM, we can use Azure Automation runbooks and expose each one through a webhook.

Reference: Azure Automation

Reference: Create a Graphical Runbook

Reference: Azure automation via webhooks
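As an illustration of what a caller does with such a webhook, here is a minimal Python sketch using only the standard library. It assumes the webhook behaves as documented for Azure Automation: an HTTP POST starts the runbook, any request body is handed to the runbook through its `WebhookData` parameter, and the response body is JSON containing a `JobIds` list. The payload keys are hypothetical; use whatever your runbook reads.

```python
import json
import urllib.request
from typing import List, Optional


def build_webhook_request(webhook_url: str, payload: Optional[dict] = None) -> urllib.request.Request:
    """Build a POST request for an Azure Automation webhook.

    The optional JSON payload reaches the runbook as the request body
    inside its WebhookData parameter.
    """
    body = json.dumps(payload or {}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_job_ids(response_body: bytes) -> List[str]:
    """Extract the runbook job IDs from the webhook's JSON response."""
    return json.loads(response_body.decode("utf-8")).get("JobIds", [])


def trigger_webhook(webhook_url: str, payload: Optional[dict] = None, timeout: float = 30.0) -> List[str]:
    """POST to the webhook and return the IDs of the runbook jobs it queued."""
    req = build_webhook_request(webhook_url, payload)
    # urlopen raises HTTPError for 4xx/5xx responses.
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_job_ids(resp.read())
```

The webhook URL embeds its security token and Azure only shows it once, when the webhook is created, so store it safely and treat it like a password.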

5-ADF Pipeline running over ADF IR server

Back in ADF, I built two pipelines: the first exports the file to Azure Blob Storage and the second FTPs the file to the 3rd party server. I wrapped both in a single pipeline for execution.

The important piece is to use the self-hosted integration runtime for the FTP connection. This way, the only IP the 3rd party needs to allow is the VM's single static IP.
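Here is a hedged Python sketch of what such a linked service definition can look like as ADF JSON. One caveat, as far as I know from the connector documentation: ADF's plain FTP connector is source-only, so writing files to a remote server usually goes through the SFTP connector, and that is what this sketch assumes. The key part for this article is `connectVia`, which pins the connection to the self-hosted IR. The service, host, user, and IR names in the usage note are hypothetical.

```python
import json


def sftp_linked_service(name: str, host: str, user: str, password: str,
                        ir_name: str, port: int = 22) -> dict:
    """Return an ADF SFTP linked service definition pinned to a self-hosted IR."""
    return {
        "name": name,
        "properties": {
            "type": "Sftp",
            "typeProperties": {
                "host": host,
                "port": port,
                "authenticationType": "Basic",
                "userName": user,
                # Prefer an Azure Key Vault reference over an inline secret.
                "password": {"type": "SecureString", "value": password},
                # With validation on, the connector also needs the server's
                # host key fingerprint; check the SFTP connector docs for the
                # exact property name before deploying.
                "skipHostKeyValidation": False,
            },
            # Routes the connection through the self-hosted IR VM, so the
            # 3rd party firewall only sees that VM's static IP.
            "connectVia": {
                "referenceName": ir_name,
                "type": "IntegrationRuntimeReference",
            },
        },
    }
```

For example, `json.dumps(sftp_linked_service("ThirdPartySftp", "sftp.example.com", "exporter", "<secret>", "SelfHostedIR"), indent=2)` produces the JSON body to paste into the linked service's code view.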

Connecting Azure VM in ADF Integration Runtime

6-Logic App to the rescue

We have all the pieces now and need to glue them together and build a workflow to execute them in order. Azure Logic App is a great service for this purpose and I’m going to take advantage of it.

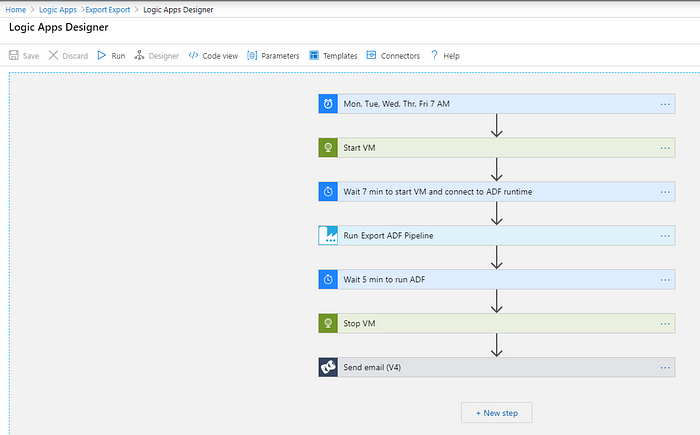

I built a Logic App with the workflow shown here:

- Trigger every weekday at 7:00 AM

- Start ADF IR VM over the webhook

- Wait 7 min for the VM to start and connect to the ADF runtime

- Run ADF Pipeline

- Wait 5 min for the pipeline execution to complete

- Stop ADF IR VM

- Send confirmation Email
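To make the control flow explicit, here is a minimal Python sketch that mirrors the Logic App steps with plain callables. The callables are hypothetical stand-ins for the webhook, ADF, and email actions. One design choice worth copying into the Logic App itself: the VM is stopped in a `finally` block, so a failed pipeline run does not leave the VM running and billing. In a Logic App, the equivalent is configuring the Stop VM action's run-after settings to also run when earlier steps fail.

```python
import time
from typing import Callable


def run_with_ir_vm(
    start_vm: Callable[[], None],
    run_pipeline: Callable[[], str],
    stop_vm: Callable[[], None],
    notify: Callable[[str], None],
    warmup_s: float = 7 * 60,
    pipeline_wait_s: float = 5 * 60,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Start the IR VM, run the pipeline, always stop the VM, then notify.

    Mirrors the Logic App steps, including its two fixed waits, and
    returns the pipeline run ID reported by `run_pipeline`.
    """
    start_vm()
    try:
        sleep(warmup_s)          # VM boot + IR reconnect
        run_id = run_pipeline()
        sleep(pipeline_wait_s)   # fixed wait; does not verify the run finished
    finally:
        stop_vm()                # runs even if the steps above raise
    notify(f"Pipeline run {run_id} was triggered and the IR VM has been stopped.")
    return run_id
```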

Let me describe each in more detail.

Trigger: a Logic App needs a trigger to start, so I’m using a recurrence (time) trigger that fires every weekday at 7:00 AM.
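In the Logic App's code view, a weekday 7:00 AM schedule corresponds to a recurrence trigger roughly like the one this Python sketch generates. The time zone string is an assumption; set it to your own Windows time zone name.

```python
import json

WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday"]


def weekday_recurrence_trigger(hour: int, minute: int, time_zone: str) -> dict:
    """Build a Logic Apps recurrence trigger that fires once each weekday."""
    return {
        "Recurrence": {
            "type": "Recurrence",
            "recurrence": {
                "frequency": "Week",   # a weekly recurrence...
                "interval": 1,         # ...every single week...
                "schedule": {          # ...on these days, at this time
                    "weekDays": WEEKDAYS,
                    "hours": [hour],
                    "minutes": [minute],
                },
                "timeZone": time_zone,
            },
        }
    }


if __name__ == "__main__":
    trigger = weekday_recurrence_trigger(7, 0, "Eastern Standard Time")
    print(json.dumps({"triggers": trigger}, indent=2))
```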

Start VM: calls the webhook I created for the Azure Automation runbook, using an HTTP POST action in the Logic App.

Wait: 7 min. I found that it takes a few minutes for the VM to start and then a few more minutes for the ADF runtime to connect to the VM, so I wait for both here.

Run ADF Pipeline: We execute the ADF pipeline here

Wait: 5 min for the pipeline to complete.
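A fixed wait can stop the VM too early or keep it running longer than needed. If you would rather poll, the ADF REST API exposes a create-run call and a pipeline-run lookup whose `status` field ends in `Succeeded`, `Failed`, or `Cancelled`. This Python sketch only builds the URLs and checks the status; the subscription, resource group, factory, and pipeline names are hypothetical, and the actual calls need an Azure AD bearer token, which is not shown.

```python
from urllib.parse import quote

ARM_ENDPOINT = "https://management.azure.com"
API_VERSION = "2018-06-01"
# Statuses after which there is nothing left to wait for.
# ("Queued", "InProgress" and "Canceling" mean the run is still active.)
TERMINAL_STATUSES = {"Succeeded", "Failed", "Cancelled"}


def factory_url(subscription_id: str, resource_group: str, factory: str) -> str:
    """Base ARM URL of a data factory."""
    return (
        f"{ARM_ENDPOINT}/subscriptions/{quote(subscription_id)}"
        f"/resourceGroups/{quote(resource_group)}"
        f"/providers/Microsoft.DataFactory/factories/{quote(factory)}"
    )


def create_run_url(subscription_id: str, resource_group: str, factory: str, pipeline: str) -> str:
    """POST here to start a pipeline run; the JSON response contains a runId."""
    base = factory_url(subscription_id, resource_group, factory)
    return f"{base}/pipelines/{quote(pipeline)}/createRun?api-version={API_VERSION}"


def get_run_url(subscription_id: str, resource_group: str, factory: str, run_id: str) -> str:
    """GET here to read a pipeline run, including its status."""
    base = factory_url(subscription_id, resource_group, factory)
    return f"{base}/pipelineruns/{quote(run_id)}?api-version={API_VERSION}"


def is_finished(pipeline_run: dict) -> bool:
    """True once the run's status is terminal."""
    return pipeline_run.get("status") in TERMINAL_STATUSES
```

Polling the run URL every minute or so until `is_finished` returns `True`, with an overall timeout, lets the Stop VM step run as soon as the pipeline is done.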

Stop VM: calls the webhook that stops the VM.

Email Confirmation: sends an email via the SendGrid service.
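The Logic App's SendGrid connector hides the API call, but for reference, here is a Python sketch of the equivalent request to SendGrid's v3 mail send endpoint. The addresses and API key are placeholders, and the function builds the request without sending it.

```python
import json
import urllib.request

SENDGRID_SEND_URL = "https://api.sendgrid.com/v3/mail/send"


def build_sendgrid_request(api_key: str, sender: str, recipient: str,
                           subject: str, body: str) -> urllib.request.Request:
    """Build (but do not send) a SendGrid v3 mail send request."""
    payload = {
        "personalizations": [{"to": [{"email": recipient}]}],
        "from": {"email": sender},
        "subject": subject,
        "content": [{"type": "text/plain", "value": body}],
    }
    return urllib.request.Request(
        SENDGRID_SEND_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the request to `urllib.request.urlopen` would send it; SendGrid answers a successful send with 202 Accepted.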

Reference: Azure SendGrid

Logic App Run history: Finally I can run my logic app and review the execution history.

Conclusion:

Building a complete solution in Azure often requires workflows that span multiple services. Understanding the capabilities and limitations of each service is the key to building and deploying a reliable and resilient solution. In this article I tried to demonstrate the different bits and pieces of Azure services and how I used each to build my solution. I hope you find this helpful.